Deep learning is an area of machine learning in which a model is trained by passing data through many layers of a neural network. That workload is computationally heavy, so it benefits from hardware built to handle it.

GPUs (graphics processing units) handle this workload much more efficiently than CPUs because they have far more cores and run many operations in parallel. That makes it practical to train on larger datasets in less time, and the extra training that fits into the same time budget can lead to a more accurate model.

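For Nvidia GPUs, you can leverage CUDA and the cuDNN library. cuDNN is a GPU-accelerated library of tuned primitives for deep neural networks, such as convolution, pooling, normalization, and recurrent layers, so frameworks get good GPU performance on these standard routines without hand tuning.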
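Before training, it can help to confirm that the framework actually sees CUDA and cuDNN. The following is a minimal sketch that assumes PyTorch is the framework in use (the post does not name one); the helper name `describe_gpu_support` is hypothetical. If PyTorch is not installed, it simply reports that no GPU support was found.

```python
def describe_gpu_support():
    """Report whether PyTorch can see a CUDA device and the cuDNN library."""
    try:
        import torch  # assumption: PyTorch is the deep learning framework in use
    except ImportError:
        # Without PyTorch there is nothing to query, so report no GPU support.
        return {"framework": None, "cuda": False, "cudnn": False}
    return {
        "framework": "torch " + torch.__version__,
        "cuda": bool(torch.cuda.is_available()),             # is a CUDA device usable?
        "cudnn": bool(torch.backends.cudnn.is_available()),  # was cuDNN found?
    }


info = describe_gpu_support()
print(info)
```

If `cuda` comes back `False`, training silently falls back to the CPU in many setups, so this check is worth running before comparing timings.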

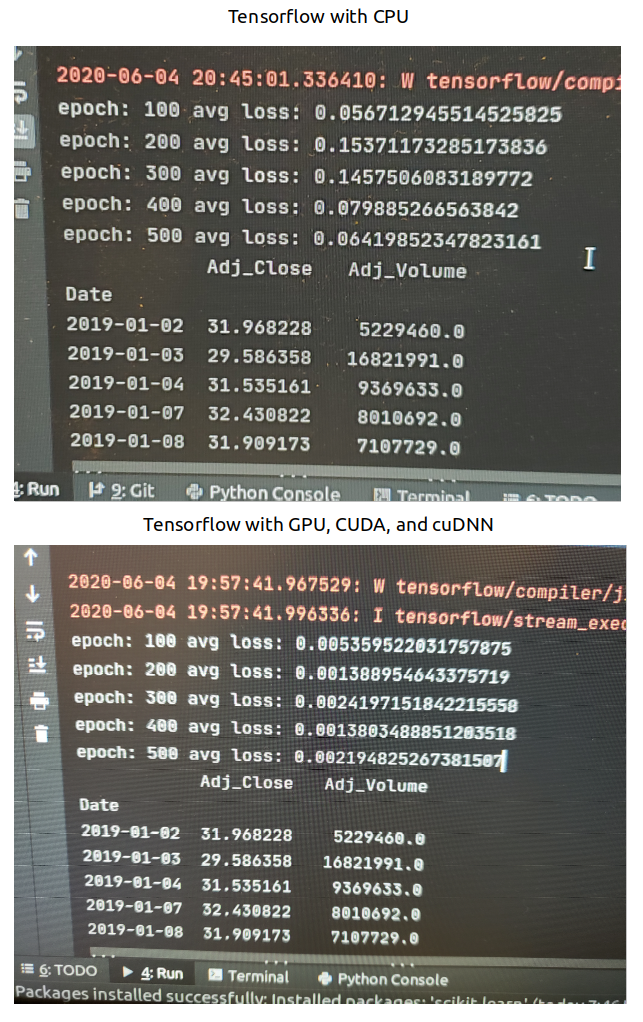

Here is an example of running the same LSTM model forecasting the stock price n days out, with the same dataset, on a CPU versus a GPU.

Average loss, i.e. the mean squared error, measures how wrong the model's forecasts are. In this comparison, the GPU run's average loss was orders of magnitude lower than the CPU run's, meaning its stock price forecasts were far more accurate.
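To make the metric concrete, here is a small sketch of how mean squared error is computed and how the gap between two runs can be expressed in orders of magnitude. All numbers are made up for illustration and are not the results from the runs above; the function names are hypothetical.

```python
import math


def mean_squared_error(predicted, actual):
    """Average of the squared differences between forecasts and true values."""
    if len(predicted) != len(actual):
        raise ValueError("predicted and actual must have the same length")
    return sum((p - a) ** 2 for p, a in zip(predicted, actual)) / len(predicted)


def orders_of_magnitude(larger_loss, smaller_loss):
    """How many powers of ten separate two loss values."""
    return math.log10(larger_loss / smaller_loss)


# Hypothetical prices and forecasts, chosen only to illustrate the arithmetic.
actual = [100.0, 102.0, 101.0]
run_a = [110.0, 92.0, 111.0]    # each forecast is off by 10
run_b = [100.1, 101.9, 101.1]   # each forecast is off by about 0.1

loss_a = mean_squared_error(run_a, actual)
loss_b = mean_squared_error(run_b, actual)
gap = orders_of_magnitude(loss_a, loss_b)
print(loss_a, loss_b, gap)  # the gap is roughly 4 orders of magnitude
```

Because the errors are squared, a forecast that is 100 times closer produces a loss about 10,000 times smaller, which is why loss gaps are often quoted in orders of magnitude.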

Accelerated Computing with CUDA

Besides the cuDNN library, CUDA offers other areas that can be optimized for performance. Nvidia provides education and training on writing and compiling CUDA kernels and on GPU memory management techniques for accelerating applications.

The next steps are to work through these courses, use other libraries such as Numba to optimize performance, and apply further techniques to train the models more efficiently, with higher accuracy and lower latency.
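As a taste of what Numba offers, the sketch below JIT-compiles a plain Python loop with `numba.njit`. It is only an illustration under assumptions: the function `mean_abs_change` and the price values are hypothetical, and this is a CPU-side example rather than a CUDA kernel. If Numba is not installed, a no-op stand-in decorator keeps the code runnable, just without the speedup.

```python
try:
    import numpy as np
    from numba import njit  # compiles the decorated function to machine code
    HAVE_NUMBA = True
except ImportError:
    HAVE_NUMBA = False

    def njit(func):
        # No-op stand-in so the sketch still runs without Numba (no speedup).
        return func


@njit
def mean_abs_change(prices):
    """Average absolute day-over-day change in a price series."""
    total = 0.0
    for i in range(1, len(prices)):
        total += abs(prices[i] - prices[i - 1])
    return total / (len(prices) - 1)


# Hypothetical closing prices; Numba works best on NumPy arrays.
raw_prices = [100.0, 102.0, 101.0, 104.0]
prices = np.asarray(raw_prices, dtype=np.float64) if HAVE_NUMBA else raw_prices

result = mean_abs_change(prices)
print(result)
```

The first call includes compilation time, so any benefit shows up on repeated calls or on much longer series than this toy input.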